This article explains how to sensitively and carefully limit your Home Assistant’s database growth, without losing any valuable data. And because data is the new oil, pretty much all of the data is valuable. Who knows when you need to know whether your basement door was open or closed 1 year and 11 months from now?

Most people have to tackle this issue with their Home Assistant instance, unless they’re running with the default settings – when recorded will purge everything (but the LTS data) after 10 days.

But I don’t accept the defaults. And besides, my Home Assistant is running on proper hardware, so it can handle some I/O and I don’t really have a need to limit the database size.

Or so I thought…

Background

When I got started with Home Assistant, I immediately started configuring automations for heating and cooling, and for that I of course needed some temperature data too. And not only current and forecast data, but some historical data as well, as I wanted to be able to track how well my automation was working in different weather conditions.

So while I configured my recorder to have a custom database location, I also set the purge delay to be a bit longer than the default 10 days.

“How much longer”, you ask?

Well… Here’s my config for defining the database location and setting the purge delay:

# Change database location:

# https://www.home-assistant.io/integrations/recorder/

recorder:

db_url: sqlite:////db/home-assistant_v2.db

purge_keep_days: 720I’d like to direct your attention to the line that says: purge_keep_days: 720

Ah, yes. 720 days sounds totally sensible. Especially for something that, when configured through the UI, has a maximum value of 360!

When testing, I realized for my sensors that didn’t have long-term-statistics enabled, I was now storing 12-24 months of data. Which is of course a lot, but not as much as I wanted – and it’s also in much more detail than I’d ever need! For sensors that did support LTS, the amount of data seemed much less consistent.

Admittedly, I didn’t really understand long-term statistics. So I dove in to investigate and learn.

Understanding LTS in Home Assistant

LTS – Long-Term Statistics – in Home Assistant refers to the extended retention of detailed historical data for your entities. It allows users to analyze and visualize long-term trends and patterns in their data, which is particularly useful for monitoring energy usage, temperature changes, and other metrics over extended periods.

But how long will long-term statistics stay in home assistant?

Long-term statistics in Home Assistant are designed to be retained indefinitely. Unlike short-term statistics, which are purged after a default period of 10 days, long-term statistics are never purged. This means that they will continue to be stored and available for as long as you use Home Assistant. Nice.

Fixing my huge database

The first thing I needed to do to even get started, was to set up tracking for the database size. I already kind of had this, as I take backups of the database roughly once per 24 hours to cloud storage, and my cloud storage provider does provide history for the file, including its size.

However, I wanted to do something more… Home-Assistanty. I wanted to track the database size in Home Assistant.

I know, I know – I’m amassing too much data to my Home Assistant database. And I’m solving that by adding more stuff to my Home Assistant… 😁

Because of course I am.How to unbloat my Home Assistant by adding more stuff to it?

I could find many ways to do this, but the more technical solutions didn’t seem to work. Instead what I did was to add an integration called “SQL”, and add a query like this:

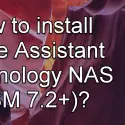

SELECT ROUND(page_count * page_size,1)/1000000 as size FROM pragma_page_count(), pragma_page_size();It looks like this in the UI:

Somehow the default name of the sensor ends up being its assigned the name twice – kinda messed up – but you can fix it through the UI afterwards.

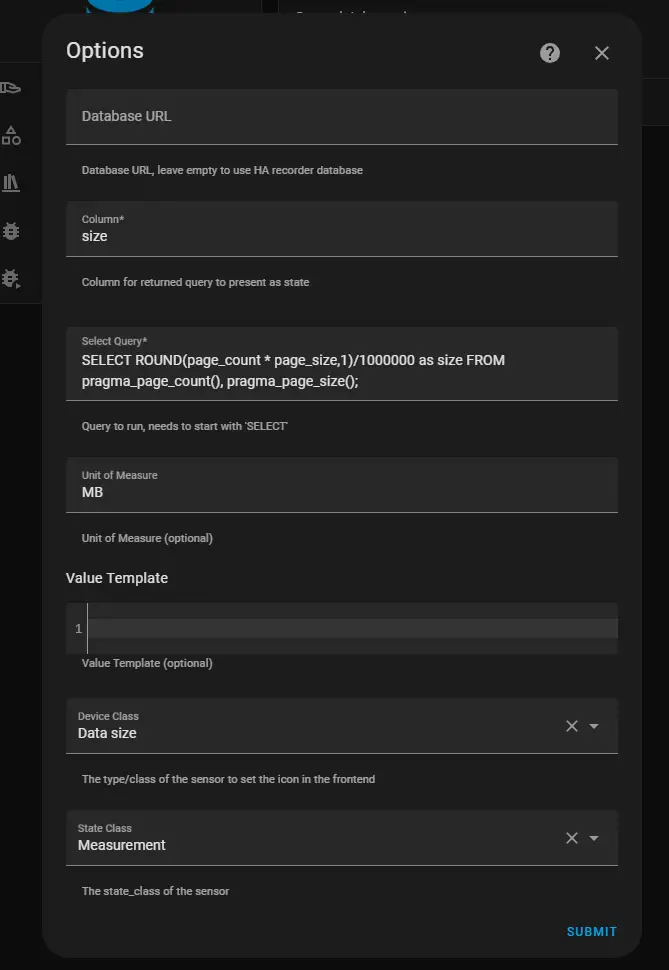

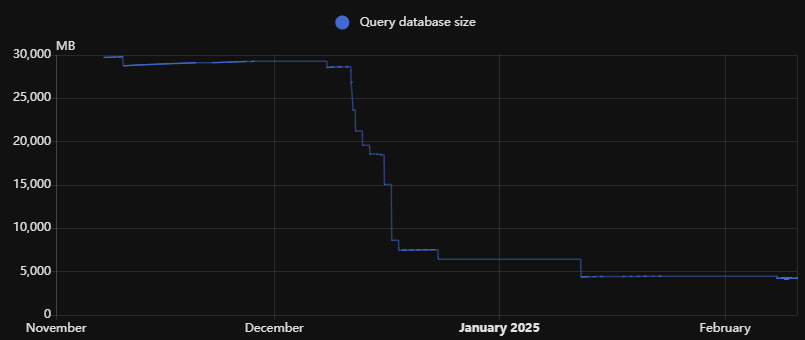

And after a couple of days, I could see my database and the speed at which it’s growing:

Yikes. It’s just 50 megs or so per day, but that’ll add up to 17-18 gigs per year. I need to start taming this.

What am I actually tracking?

I figured I’ll need to start by seeing which entity states do I actually have as tracked entities.

{% for state in states %}

{{ state.entity_id -}} : {{ state.state -}}

{% endfor %}This’ll produce a long list, in all likelihood. And it won’t tell you how much data there is for each.

But it IS still a list that you can go through to immediately see stuff you don’t care for. And I saw a lot of stuff like that.

So the first thing I decided to do was to remove some sensor types that I really don’t use much from even being recorded. It won’t be a big help, but still – it’s something:

recorder:

db_url: sqlite:////db/home-assistant_v2.db

purge_keep_days: 720

exclude:

domains:

# domains to exclude from recording

- updater

- media_player

- weather

- sun

- persistent_notification

entities:

# specific entities to exclude, while the domain may be included

- device_tracker.98_d8_63_34_af_4a

- device_tracker.44_5d_5e_02_22_3e

entity_globs:

# groups of sensors by using a wildcard

- sensor.rain*

- binary_sensor.aimesh_*

- sensor.4g_ac68u*

- update.4g_ac68u*

- button.4g_ac68u_*

- switch.4g_ac68u_*

- device_tracker.esp_*

- device_tracker.lwi*

- sensor.openweathermap_*

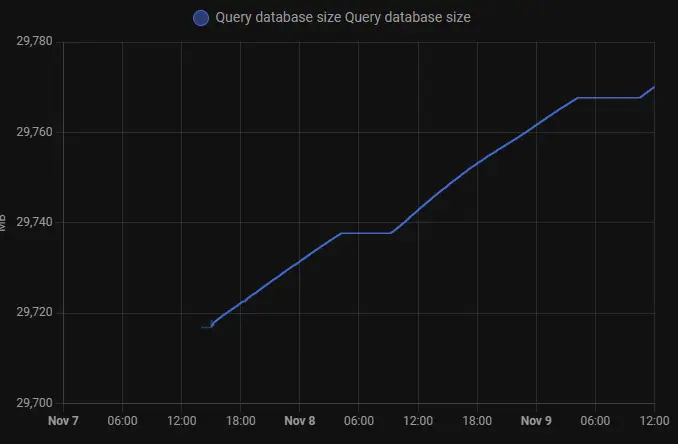

- sensor.climacell_*This… Did something:

Looking into my database sensor after excluding all of those entities, I could see that my database did indeed shrink by about a full gigabyte – that’s by more than 3%!

I mean… The database is still over 28 GBs, and growing. But I did shave off a full gigabyte!

Okay, enough celebration. I’m still logging way too much data.

Limit the amount of history data on my entities

I wanted to investigate what I’m actually storing.

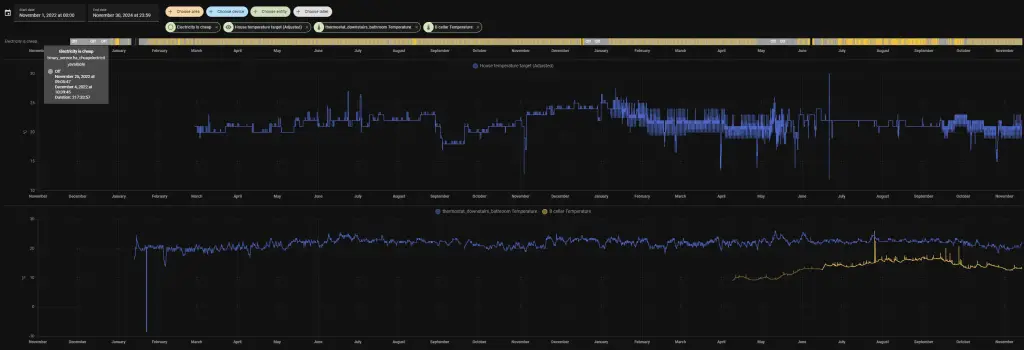

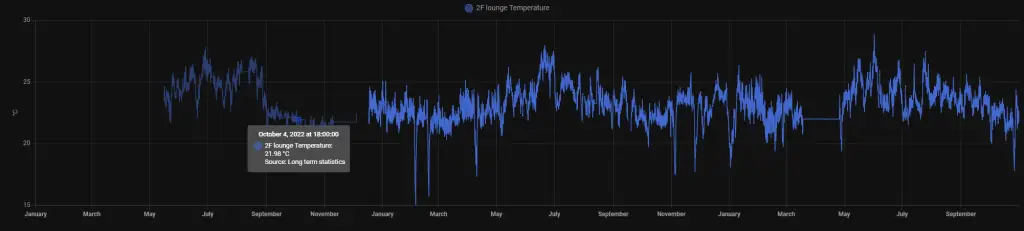

Checking in November 2024, I decided to look back for a bit more than 2 years. Since I had the recorded configuration at 720 days, I was expecting to see almost 2 years (2023+2024 have altogether 731 days) worth of data.

I wasn’t disappointed (although most of my sensors have been configured or created within the last 2 years and didn’t have the full dataset):

This brings us to a bit of a problem, however: the database will keep growing for quite a bit.

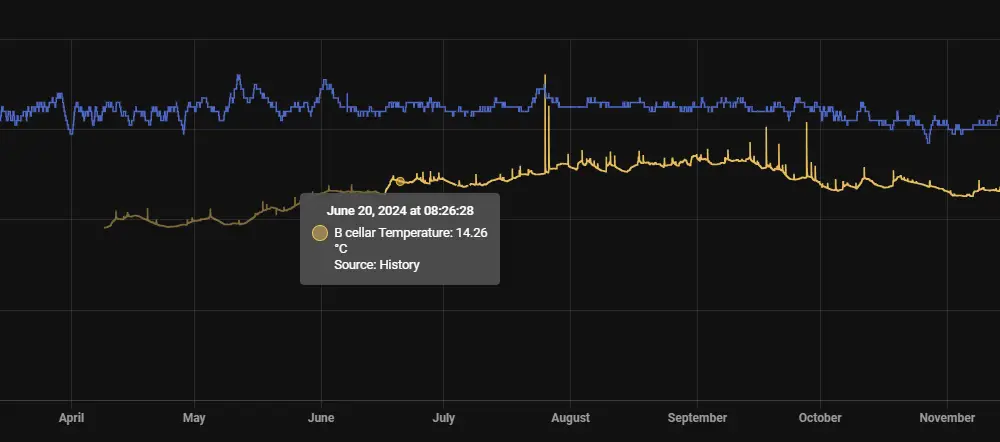

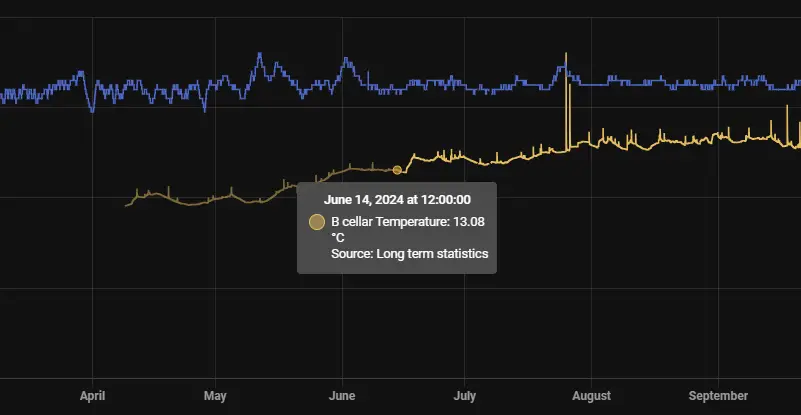

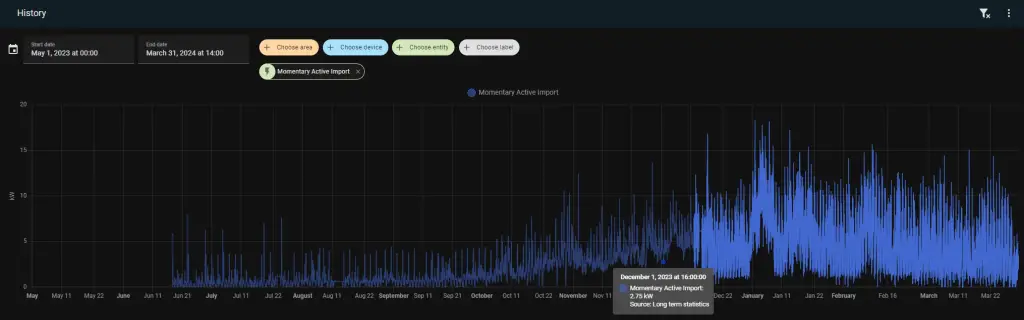

Looking closer to some of my entities, I can see some entities with 2 different shades in the graph.

Brighter stuff comes from Short-term statistics. It’s called “History” in the graphs.

Older stuff changes the data source to be “Long-term statistics”. I don’t have an answer why this sensor transforms this quickly, while most have taken a long while and I had no purge rules beyond the 720 days at this point.

For some longer-term data – one of my first Sonoff hygrometers (temperature / humidity sensors) has been going on since May 2022, and only the oldest stuff is now moved to long-term statistics. Everything until November 2022 is considered history, not long-term statistics. Unlike the sensor above, this one actually seems to adhere to the 720 day limit!

Okay. But I think we can all agree that 2 years is a lot of detailed data to keep in the database. I don’t actually need every single data point over such a long time!

But how do I make sure I can clean up the detailed data, but leave the statistical, distilled data (that’s in Long-term statistics)?

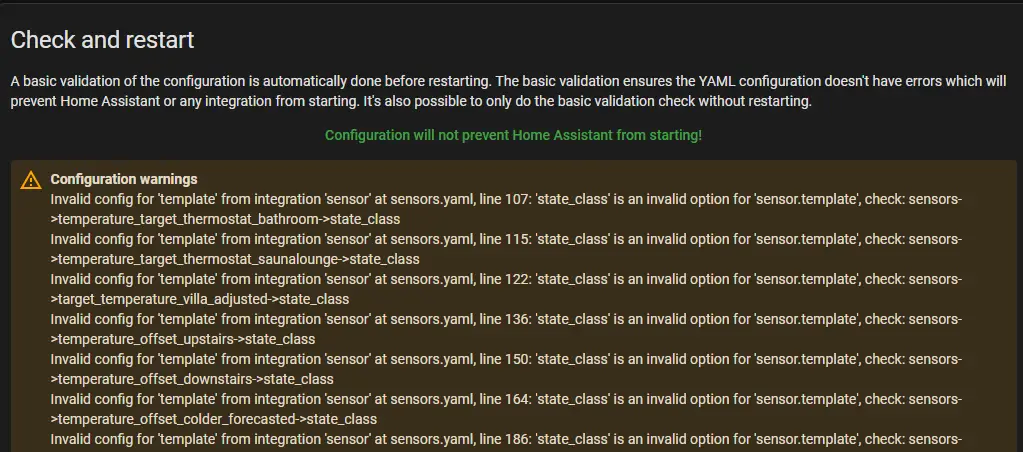

Fixing the state_class for my custom sensors

I like my custom sensors states to be stored in LTS – I use those for a lot of things, after all, and could use some historical data – so I needed to figure out how to do that.

And the right way to do this, apparently, is to add a valid state_class for those entities. Home Assistant’s always helpful documentation states this:

Long-term Statistics Home Assistant has support for storing sensors as long-term statistics if the entity has the right properties. To opt-in for statistics, the sensor must have state_class set to one of the valid state classes: SensorStateClass.MEASUREMENT, SensorStateClass.TOTAL or SensorStateClass.TOTAL_INCREASING. For certain device classes, the unit of the statistics is normalized to for example make it possible to plot several sensors in a single graph. https://developers.home-assistant.io/docs/core/entity/sensor/

However… I was using something called “legacy configuration” mode for my template sensors. I didn’t even know there was a modern configuration available – for the duration of about 3 years, I’ve always set my sensors up the same way. Which, apparently, was the “legacy” way.

Long story short, you can not set state_class for your sensors using the legacy configuration. Your options are to migrate everything to the modern way (manually), or to add the following customization for every single sensor you have:

customize:

sensor.entity_id:

state_class: measurementAdding this to every sensor manually would’ve taken me at least 10 minutes, so of course I opted to do the right thing, and “modernize” the setup instead. That “right” step took me about 4 hours.

… sometimes my own decisions make me question my sanity. But here we are.

Anyway. I got it done. But ran into another question: How do I store my binary_sensors (on/off, true/false, open/closed)? I might need that data some day.

The bad news is that there’s no way to force a binary_sensor to be stored in long-term -statistics. At least not according to documentation or, after a lot of pressing and asking “are you sure about that?”, Copilot. It did offer me 5 different ways that don’t work, and when confronted, became very defensive before eventually admitting there’s no way to store binary_sensor data in LTS.

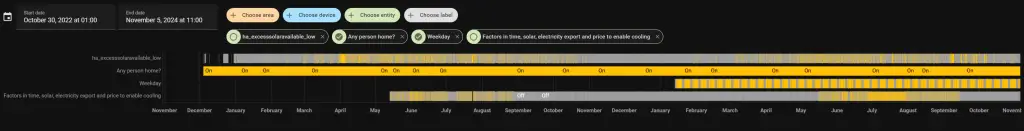

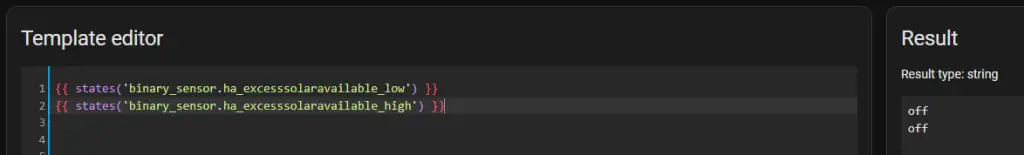

So in order to investigate if the data actually gets dropped, I wanted to see how much data my binary_sensors actually have available in the past.

Well – it’s what I would expect. Up to 720 days for the few entities that have existed for a long enough time.

At the time of writing, 17.12.2022 is exactly 720 days before today.

(Yes, this article was under construction for about 3 months while I was working on reducing the database size!)

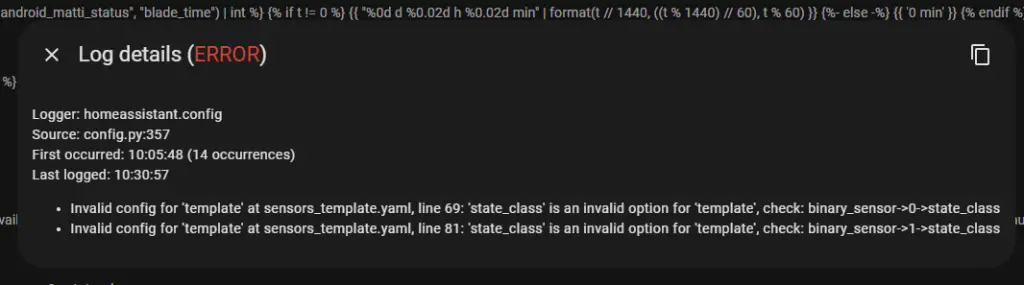

I tried adding state_class to the binary_sensors directly.

Maybe not unexpected – but it didn’t work.

I decided to try an alternative way, and added this to my customize.yaml:

binary_sensor.ha_excesssolaravailable_low:

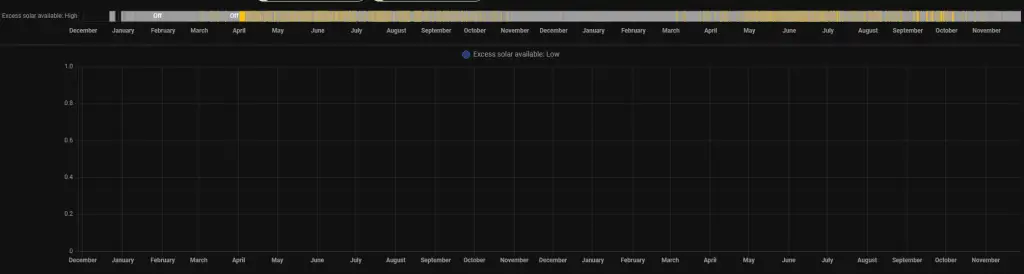

state_class: measurement… and apparently it WORKS, but it breaks the graphs.

Now you can’t see the older values – which was the sole reason why I was trying to save these values for a longer time in the first place!

In theory, you could do that anyway, since the data is rendered properly when you test it in Developer tools:

But I certainly did not want to deal with that headache. I reverted my changes to customize.yaml and accepted that in order to store my binary_sensor values for at least 2 years, I wouldn’t touch the purge_keep_days.

So I needed to find a way to limit the database growth some other way!

Luckily, with Home Assistant – there is a way. You can call the purge Action from an automation, defining what to purge yourself.

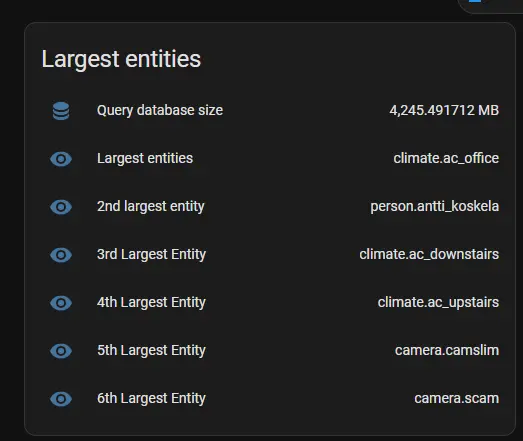

Identifying the largest entities

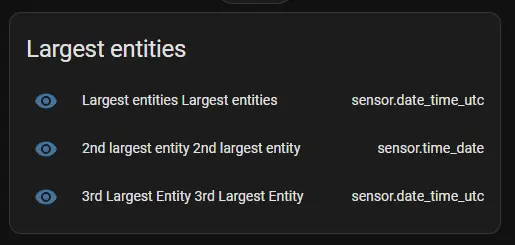

In order to know what to purge manually, I decided to figure out which entities of mine were the biggest.

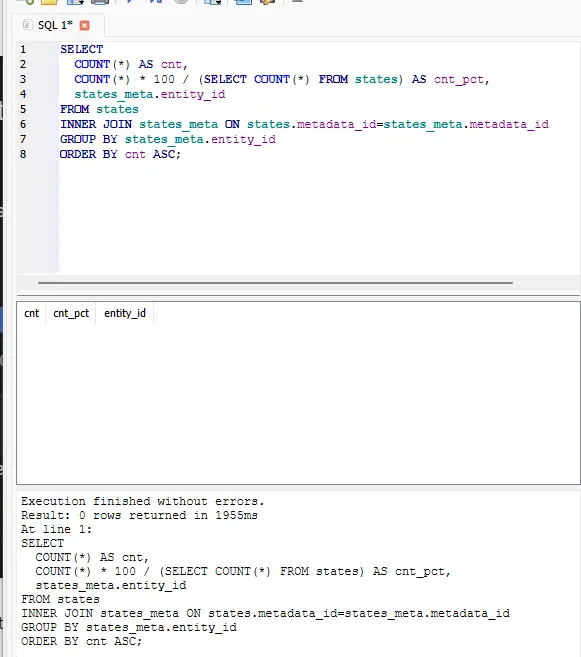

I downloaded my database and opened in DB Browser for SQLite, to run the following SQL:

SELECT

COUNT(*) AS cnt,

COUNT(*) * 100 / (SELECT COUNT(*) FROM states) AS cnt_pct,

states_meta.entity_id

FROM states

INNER JOIN states_meta ON states.metadata_id=states_meta.metadata_id

GROUP BY states_meta.entity_id

ORDER BY cnt ASC;But no matter what I ran, the return value would always be empty.

I figured that DB Browser for SQLite can’t apparently handle a 30-gigabyte database.

Bah. It isn’t even a big database, right?

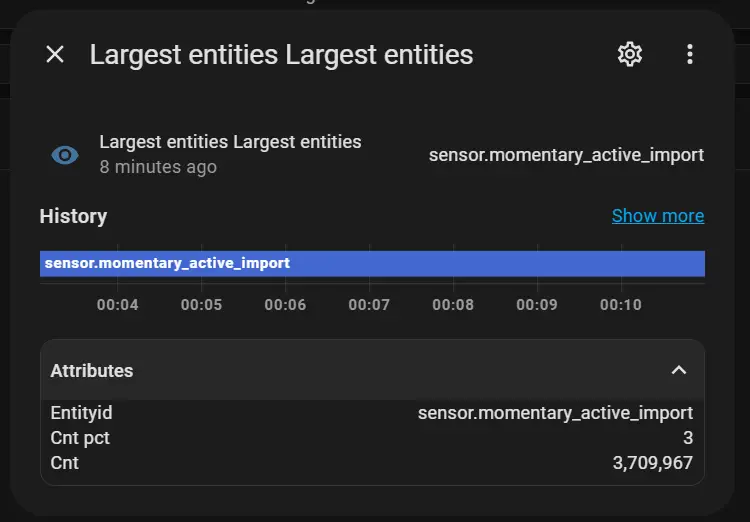

Instead, I decided to use the SQL Integration in Home Assistant. Apparently there is no problem I can’t solve by adding more stuff to Home Assistant!

Unfortunately, I don’t run HASS Supervised, so I can’t run queries (as I don’t have the possibility to set SQL up as an add-on), but what I CAN do is to create new entities for query results…

So new entities I created:

SELECT

states_meta.entity_id as entityid,

COUNT(*) * 100 / (SELECT COUNT(*) FROM states) AS cnt_pct,

COUNT(*) AS cnt

FROM states

INNER JOIN states_meta ON states_meta.metadata_id = states.metadata_id

GROUP BY states.metadata_id

ORDER BY cnt DESC LIMIT 1 OFFSET 0(To get the second largest, change “OFFSET 0” to “OFFSET 1”)

And behold:

… apparently SQL integration will double-name all entities. Ok then. I can always rename them.

But on to analyzing the results – I see my power meter is generating a lot of state changes – namely, momentary_active_import in itself is responsible for 3% of all data in the table, and there are overall a dozen or so entities like it.

After verifying that the data from the power meter sensors is getting funneled into LTS properly, I decided to start purging it.

I cleaned it up using the “Purge” Action in Developer Tools. And nervously opened the entity history…

Phew. It IS working!

Cleanup on entities that will be stored in LTS anyway

So I set out to purge the heaviest of my sensors. I decided to set up automations like below to do this:

alias: "Recorder: Purge energy entities"

action: recorder.purge_entities

metadata: {}

data:

keep_days: 2

entity_globs:

- sensor.momentary_active_*

- sensor.momentary_reactive_*

- sensor.cumulative_active_*

- sensor.cumulative_reactive_*

- sensor.current_phase_*

- sensor.voltage_phase_*

- sensor.electricity_*Alright. That’s something to try.

Firing it off!

Mildly worried, I clicked “Run”, checked the traces, and… It finished without further information.

I quickly realized that’s an asynchronous operation and there won’t be any logging available for it. But admittedly, only after firing it off at least twice.

You live and you learn.

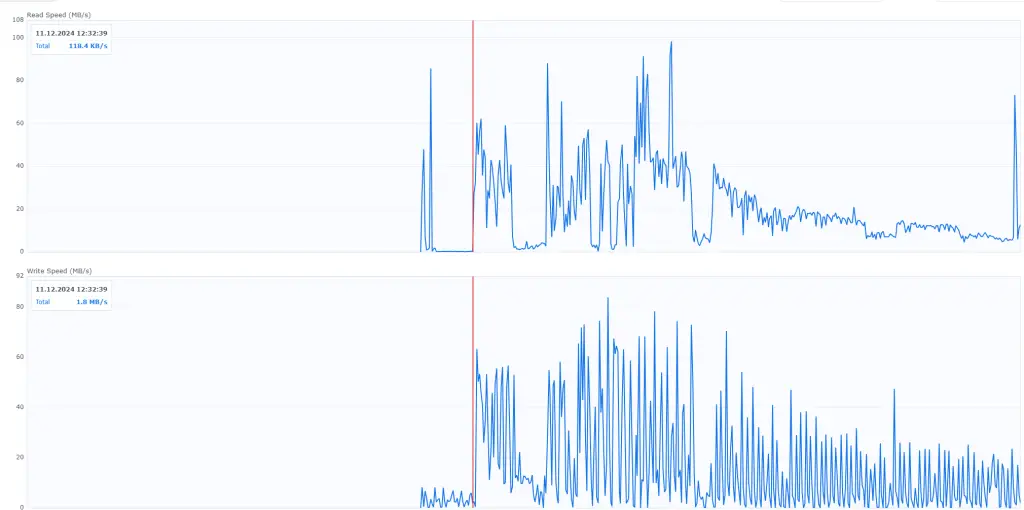

Checking my Synology statistics, both read and write speeds were through the roof – and mostly because of the Home Assistant container as I didn’t have any backups running at the time.

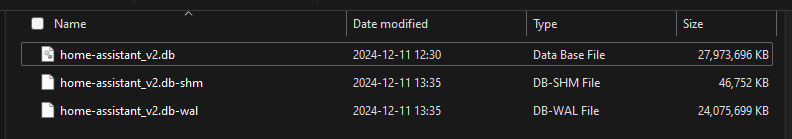

That was certainly doing something! I took a quick look at my database (or the directory in which SQLite had the database files anyway).

In case you’re not familiar with these files, here’s how they work:

- .db file: This is the main SQLite database file.

- It contains the actual data and schema of the database

- .db-shm file: This stands for Shared Memory.

- It’s a temporary file used by SQLite in Write-Ahead Logging (WAL) mode to manage shared memory access. It helps multiple processes to interact with the database efficiently and consistently

- .db-wal file: This stands for Write-Ahead Log.

- In WAL mode, changes are first written to this file before being committed to the main database file.

- This improves performance and concurrency, as readers and writers can access the database simultaneously.

The .db-shm and .db-wal files are normally next-to-nothing in size. So I could see for a fact that the whole database was being rewritten on disk… 😅

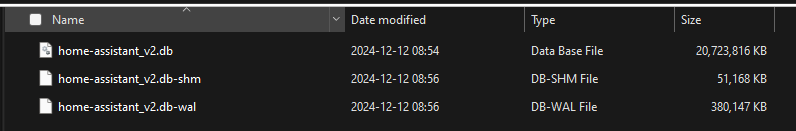

After hours and hours of purging and repacking (yeah, I get now why you don’t repack the database every night or even every week!), it got to this:

Yes, it took until next day!

So after the regular, weekly/biweekly purge & repack, my database was about 28 GBs (which is already an improvement over my earlier numbers).

And after my additional purging, I got it down to measly 20 GBs!

20 GBs. That’s practically nothing! But we can do more…

On to removing more stuff

Since the SQL integration doesn’t easily let me query a list of entityids to one sensor (or let me know if you figure out how to do it!), I instead created a bunch of entities to hold biggest, second biggest, third biggest entity, and so on.

And as my automation and recorded configuration evolved, these entity values would change.

For all the new entities on my list, I got to make the call – remove from recorder or purge faster than the rest of the entities?

And I made that call quite a few times, mostly purging the data, but I did add some limitations to recorder too.

What did I end up with?

At the end of the day, I ended up leaving the recorder’s purge_keep_days to 720, because I like to hold on to the binary_sensors’ data.

Instead, I set limited my recorder’s data intake like this:

recorder:

db_url: sqlite:////db/home-assistant_v2.db

purge_keep_days: 720

commit_interval: 30

exclude:

domains:

# domains to exclude from recording

- updater

- media_player

- weather

- sun

- persistent_notification

entities:

# specific entities to exclude, while the domain may be included

- device_tracker.98_d8_63_34_af_4a

- device_tracker.44_5d_5e_02_22_3e

- sensor.date

- sensor.time_utc

- sensor.time

- sensor.date_time_utc

- sensor.time_date

- sensor.date_time_utc

- sensor.date_time_iso

entity_globs:

# groups of sensors by using a wildcard

- sensor.rain*

- binary_sensor.aimesh_*

- sensor.4g_ac68u*

- device_tracker.esp_*

- device_tracker.lwi*

- sensor.openweathermap_*

- sensor.climacell_*And I carefully set up an automation that runs every night to clean up some data, with very specific numbers of days to keep (simplified a little bit below):

alias: "Home Assistant: Purge Some Entities nightly"

description: This automation purges entities that aren't needed for the full 720 days.

triggers:

- trigger: time_pattern

hours: "3"

minutes: "00"

seconds: "00"

actions:

- alias: >-

Recorder: Purge after 1 day | Entities that shouldn't have been recorded

in the first place

action: recorder.purge_entities

metadata: {}

data:

keep_days: 1

domains:

- updater

- media_player

- weather

- sun

- persistent_notification

entity_id:

- device_tracker.98_d8_63_34_af_4a

- device_tracker.44_5d_5e_02_22_3e

- sensor.date

- sensor.date_time_utc

- sensor.time_date

- sensor.date_time

- sensor.date_time_utc

- sensor.date_time_iso

- sensor.time_utc

- sensor.time

entity_globs:

- sensor.rain*

- binary_sensor.aimesh_*

- sensor.4g_ac68u*

- device_tracker.esp_*

- device_tracker.lwi*

- sensor.openweathermap_*

- sensor.climacell_*

- device_tracker.wifi*

- alias: >-

Recorder: Purge after 2 days | Entities that are mostly used immediately,

not for long term (and numericals stay in history anyway)

action: recorder.purge_entities

metadata: {}

data:

keep_days: 2

entity_globs:

- sensor.momentary_active_*

- sensor.momentary_reactive_*

- sensor.cumulative_active_*

- sensor.cumulative_reactive_*

- sensor.current_phase_*

- sensor.voltage_phase_*

- sensor.electricity_*

- sensor.sonoff_*_rssi

- sensor.monthly_energy_*

- sensor.daily_energy_*

- sensor.energy_*

- sensor.boiler_hours_*

- sensor.formaldehyde_detector_*

- sensor.xe75pro_*

- sensor.energy_usage_grid_consumption_today

- sensor.energy

- sensor.query_database_size

- binary_sensor.ha_excesselectricityavailable_*

- binary_sensor.ha_excesssolaravailable_*

- sensor.estimated_illuminance

- alias: >-

Recorder: Purge after 7 days | Useful stuff that will be in history

anyway

action: recorder.purge_entities

metadata: {}

data:

keep_days: 7

entity_id:

- sensor.temperature_mudroom_actual

- sensor.average_temp_house_living_spaces

- sensor.tb_x103f_battery_level

entity_globs:

- sensor.sonoff_*

- input_number.temperature_target*

- sensor.temperature_target_*

- sensor.target_temperature_*

- sensor.average_temperature_*

- sensor.average_humidity_*

- sensor.temperature_offset_*

- sensor.8_8_8_*

- sensor.green_house_*

- sensor.hygro_*

- sensor.ac_*

- alias: >-

Recorder: Purge after 3 months | Interesting stuff that's not stored in

LTS, like camera data and device locations

action: recorder.purge_entities

metadata: {}

data:

entity_id:

- camera.camslim

- camera.scam

keep_days: 90

domains:

- camera

entity_globs:

- device_tracker.sm_*

- device_tracker.lenovo_tab_2

- alias: >-

Recorder: Purge after a year | Interesting but not saved in history, like

old climate data

action: recorder.purge_entities

metadata: {}

data:

keep_days: 365

domains:

- climate

- person

- device_tracker

entity_globs:

- binary_sensor.sonoff_*

- alias: "Recorder: Purge other old data after 30 days"

action: recorder.purge_entities

metadata: {}

data:

keep_days: 30

entity_globs:

- automation.*

- sensor.tb_x103f_*

- sensor.largest_entit*

- sensor.*_largest_entit*

- alias: "Recorder: Repack"

action: recorder.purge

metadata: {}

data:

keep_days: 720

repack: true # Change this unless you like a lot of writes on your db disk!

enabled: true

mode: single

And I set up a couple additional entities, so that I can review a bunch of biggest entities instead of just 3.

Alright. So with all this setup, what have I achieved?

End results

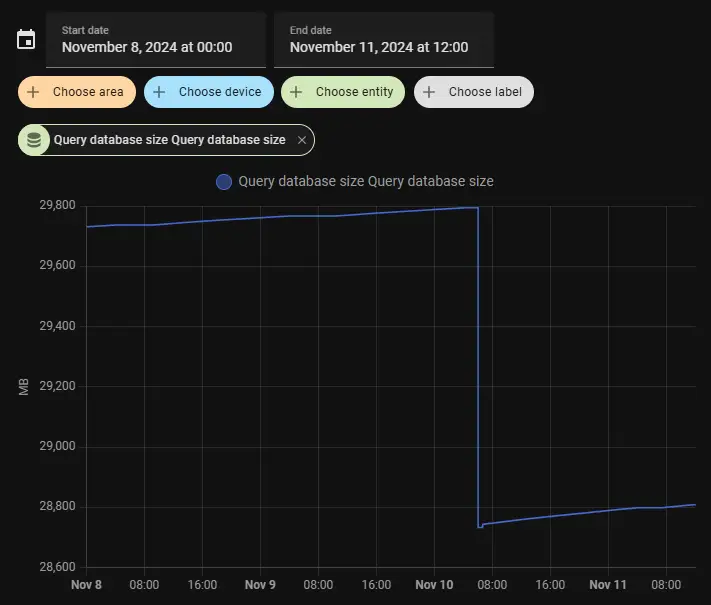

Finally, this is the database size I ended up with:

About 4.5Gbs. Miniscule and easy to handle by my hardware, but of course I’ll need to work on the cleanup automation in the future too.

And yes, I am aware that some people have Home Assistant databases that are in the Megabyte range. I have no desire to be one of those people 😂

That said, feedback and comments are accepted! 😅

(I won’t be taking referrals to therapy for hoarding disorder though)

References

I used a lot of materials to get where I am now. Here are the best ones:

- https://community.home-assistant.io/t/steps-to-reduce-write-cycles-and-extend-sd-ssd-life-expectancy/291718

- https://community.home-assistant.io/t/taming-the-recorder/271932

- https://community.home-assistant.io/t/how-to-reduce-your-database-size-and-extend-the-life-of-your-sd-card/205299

- https://community.home-assistant.io/t/how-to-keep-your-recorder-database-size-under-control/295795